We are pleased to share that our technical refresh of our US interconnection platform LINX NoVA is now complete. Our strategy to replace all legacy devices at our Internet Exchange Point (IXP) in Northern Virginia was announced last year with Nokia being selected as a technical partner.

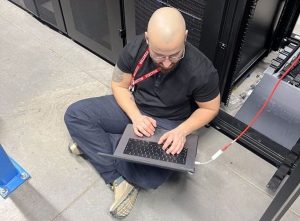

Hear from LINX Senior Network Engineer Joe Jefford on the work carried out at LINX NoVA, from planning through to build and member migration:

***

Introduction

This blog will outline the full process taken to refresh and upgrade the LINX NoVA LAN. After 10 years in service, it was time to replace the Juniper MX960 solution with a Nokia SR Linux solution and add some improvements to the network.

Planning

This network refresh started back in January 2023 with initial network designs, and multiple different options, being considered. The design agreed by our Lead Architects here at LINX was then put forward to the Board for approval, signed off and the kit was ordered.

We completed physical site audits to fully plan the kit install locations. As this was a dual build, space was tight in a few of the racks, so the audits were an important step to confirm we could get the new kit installed and to order any additional power or structured cabling required.

We decided to relocate our rack in Digital Realty, due to space constraints for the new install and because the rail locations in the existing rack were not suitable for the new equipment.

The new solution uses Nokia 7220 IXR-D3 switches for both core and edge devices, running SR Linux. These switches are 1U devices with 32x100G port capacity. For 10G member connections we are using 4x10G breakout connections. This has vastly improved the port density and scalability for members at LINX NoVA, especially for future demand for 100G ports.
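To put the port density in numbers, here is a minimal sketch of the arithmetic for a single switch. The 32x100G and 4x10G figures come from the text above; the number of ports reserved for inter-switch links and the number of ports broken out are purely hypothetical values chosen for illustration, not the actual LINX NoVA allocation.

```python
# Illustrative port-capacity arithmetic for one 32x100G switch with 4x10G breakouts.
PORTS_PER_SWITCH = 32   # from the article: 32x100G per 1U device
BREAKOUT_FACTOR = 4     # from the article: each broken-out port gives 4x10G


def member_capacity(reserved_ports: int, breakout_ports: int) -> dict:
    """Return member-facing 100G and 10G port counts for one switch.

    reserved_ports: 100G ports set aside for ISLs/uplinks (hypothetical value).
    breakout_ports: 100G ports split into 4x10G for member connections.
    """
    if reserved_ports < 0 or breakout_ports < 0:
        raise ValueError("port counts must be non-negative")
    if reserved_ports + breakout_ports > PORTS_PER_SWITCH:
        raise ValueError("more ports allocated than the switch has")
    native_100g = PORTS_PER_SWITCH - reserved_ports - breakout_ports
    return {"100G": native_100g, "10G": breakout_ports * BREAKOUT_FACTOR}


# Hypothetical split: 4 ports for ISLs, 8 ports broken out to 10G.
example = member_capacity(reserved_ports=4, breakout_ports=8)
print(example)
```

With this made-up split, one 1U device would still offer 20 native 100G member ports alongside 32 10G ports.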

The optical transport is running on Smartoptics DCP-404s, running at 4x100G via DCP-M40s to allow for future upgrades on the ISLs (Inter-Switch links) if required.

During this work we also planned and executed an upgrade on the server hardware from HP to Dell D350 servers to match the rest of the LINX infrastructure running on Dell.

Network Build

In November 2023 we returned to the LINX NoVA site for the actual install of the new network.

The plan was to build the new network completely as a dual build, but with no NNI (network-to-network interface) between the two, to avoid any potential interoperability issues between the two vendors. This also allowed us to fully install, configure and test the new network in isolation from the existing one before moving the LINX members over.

The installations went well, with the solution built and brought live over a 2-week period. This was completed site by site, following the same ‘step by step’ build process we use for these installs, doing one task at a time:

- All cage nuts and cable management installed.

- All device rails connected to the devices or rack, then all devices racked.

- All copper management and console cables run in to provide remote reachability. Then all cable runs bundled and run in.

In the completely new Digital Realty site we also trialled PATCHBOX cage nuts, which worked well (with much less chance of catching your fingers!). This also sped up the install in this location: they take half the time to put in, and the way they support equipment makes installing front-mount-only devices a lot easier.

Once a site was installed and fully cabled, we then ran through the testing to ensure every patch went to the correct ports and was up as expected.
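As a rough illustration of this kind of patch check, the sketch below compares a cabling plan against what is actually seen on each port. The data structures and port names are hypothetical, and the way the observed neighbour and link state would be collected (for example from LLDP or an interface status poll) is an assumption rather than a description of the LINX tooling.

```python
from typing import Dict, List, Optional, Tuple


def audit_patching(
    expected: Dict[str, str],
    observed: Dict[str, Tuple[Optional[str], bool]],
) -> List[str]:
    """Compare the cabling plan with what each port actually sees.

    expected: local port -> remote port it should be patched to.
    observed: local port -> (remote port seen, or None; whether the link is up).
    Returns a list of human-readable issues; an empty list means all good.
    """
    issues = []
    for local, want in sorted(expected.items()):
        seen, is_up = observed.get(local, (None, False))
        if seen != want:
            issues.append(f"{local}: expected {want}, saw {seen}")
        elif not is_up:
            issues.append(f"{local}: patched correctly but link is down")
    return issues


# Hypothetical plan and observations for two patches.
expected = {"sw1:p1": "sw2:p1", "sw1:p2": "sw3:p1"}
observed = {"sw1:p1": ("sw2:p1", True), "sw1:p2": ("sw3:p2", True)}
issues = audit_patching(expected, observed)
print(issues)
```

Here the second patch lands on the wrong remote port, so it is the only entry reported.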

This network was also built on a completely new fibre ring, which (possibly surprisingly!) all came up first time, with no need to run between sites checking cross connects or chasing fibre issues.

Once all the physicals were confirmed, the new LAN then went through our “readiness for service” checks, covering all aspects of the LAN and the systems that interact with it:

- ensuring automation is working correctly for configuration pushes,

- devices are being monitored as expected,

- weathermaps and all documentation are updated accordingly,

- and many other checks.
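A checklist like this lends itself to being run as a set of named checks. The following is a minimal sketch of that idea, not the actual LINX process: the check names mirror the items above, while the check functions are placeholders that simply return fixed values.

```python
from typing import Callable, Dict, List


def run_readiness_checks(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every check and return the names of those that failed.

    A check that raises an exception is treated as a failure, so one
    broken check cannot stop the rest of the list from running.
    """
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False
        if not ok:
            failed.append(name)
    return failed


# Placeholder checks (hypothetical): real ones would query the automation,
# monitoring and documentation systems.
checks = {
    "automation_config_push": lambda: True,
    "device_monitoring": lambda: True,
    "weathermaps_and_docs_updated": lambda: False,
}

failed = run_readiness_checks(checks)
ready_for_service = not failed
print(failed, ready_for_service)
```

The LAN would only be considered ready for service once the list of failed checks is empty.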

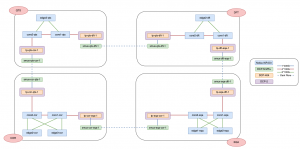

Below shows the new network design:

Member Migrations

In March 2024 we then revisited the NoVA site to complete the member migrations.

Because there was no NNI between the networks, this maintenance was a full ‘lift and shift’ of members from the old LAN to the new. We were also migrating the server hardware for captains, route servers and collectors during the same window, so it was a fair amount of work.

As we didn’t have a set date for the migrations when we did the build, we didn’t pre-run any of the new member cabling to the new network during the first visit. This avoided having large numbers of hanging cables in the racks between build and migration, which would have made operating the LAN in the meantime more difficult for our US remote hands teams. So on this visit we had a busy couple of days (early starts helped by the jet lag!) auditing and pre-running new cabling for every member to the new network across the data centre sites. Migration plans were then put together for the moves, and the port moves were pre-staged in our automation platform ready for the night.
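To illustrate what a pre-staged migration plan might look like as data, here is a minimal sketch that models each port move and checks the plan for clashes before the maintenance window. The `PortMove` structure, member names and port names are all hypothetical and are not taken from the LINX automation platform.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PortMove:
    member: str    # hypothetical member identifier
    old_port: str  # port on the legacy LAN
    new_port: str  # pre-cabled port on the new LAN


def validate_plan(moves: List[PortMove]) -> List[str]:
    """Return a list of problems; an empty list means no port is used twice."""
    problems = []
    for field in ("old_port", "new_port"):
        counts = Counter(getattr(move, field) for move in moves)
        for port, n in sorted(counts.items()):
            if n > 1:
                problems.append(f"{field} {port} appears {n} times")
    return problems


# Hypothetical plan in which two members are assigned the same new port.
plan = [
    PortMove("member-a", "old-sw1:port-1", "new-sw1:port-1"),
    PortMove("member-b", "old-sw1:port-2", "new-sw1:port-1"),
]
problems = validate_plan(plan)
print(problems)
```

Catching a double-booked port at this stage is far cheaper than discovering it at midnight with a member already unplugged.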

The migration took place on the night of 28th March starting at 00:00 local time (less fun with the jet lag!).

We had 2 LINX engineers on site, plus our remote hands team in the US, and a number of other members of the LINX technical teams working remotely in the UK on the various stages of both the member and server migrations.

These migrations went well with 60+ members across all sites moved successfully within the window, as well as 5 server upgrades.

There was a small automation configuration issue with the moves, for which we had to implement a workaround during the maintenance, but this didn’t affect proceedings too much.

Member light levels and traffic were all checked over by the remote teams and the maintenance declared a success.

Equipment Removal and Management

After monitoring the network over the weekend, we were happy the new network was stable and we could begin removing the old cabling and equipment. This was done as a joint effort with a local data destruction company, who were able to remove the heavy MX960 chassis (not the easiest of tasks) and destroy disks and data in their shredder on site. This went well and was completed across the sites over a 2-day period.

The final task was then to move the existing management network links over to the new fibre ring. This took a little more running round, as we were down to 1 LINX engineer on site by then, and after an emergency Amazon Prime delivery of attenuators (as I didn’t have enough in my bag), I got these links up over the Smartoptics DCP-M40s. Management traffic was migrated to the new fibre ring and we were ready for termination of the old fibres.

***

LINX NoVA was launched back in 2014 with the ambition of building a multi data centre, interconnected platform for the internet community in the US. Ten years on, the Internet Exchange Point (IXP) continues to welcome new connected members and data centre partners to the community, but LINX recognised the need to add even further value to networks connected there.

The LINX NoVA network refresh will enhance the capabilities and performance for existing networks connected at the IXP. It will also assist in the deployment and running of LINX’s range of interconnection services available at their London platform. This includes not only peering services, but also closed user groups, private VLAN connections and the exclusive Microsoft Azure Peering Service (MAPS).
