After successfully completing the MX10K PoCs (proofs of concept) with Juniper Networks in early 2019, we were ready to begin deploying the routers into the LON1 network.

The main aim of this project was to retire the existing PTX5000 devices in our core and replace them with MX10008 routers. In doing so, we would also change the way 100G members connect to the network, by having them connect directly to the MX10K devices. This contrasts with the ‘old’ topology, in which 100G members mostly connected to HD (high-density) edges, with provider-only devices (PTX5000s) in the core. These changes allowed us to consolidate the network and provide 100G ports at a higher density than before, while also removing the need to provide and maintain high-density edge-to-core ISLs (inter-switch links) for the HD edges.

There were also major changes in EQ4 (Equinix LD4) during this project, which saw us expand into a new suite in Equinix LD6, as well as the addition of a new PoP in THN2 (Telehouse North 2).

During these upgrades, some of the optical transport in the network was also upgraded, and we deployed Smartoptics Smart MUXes for the first time.

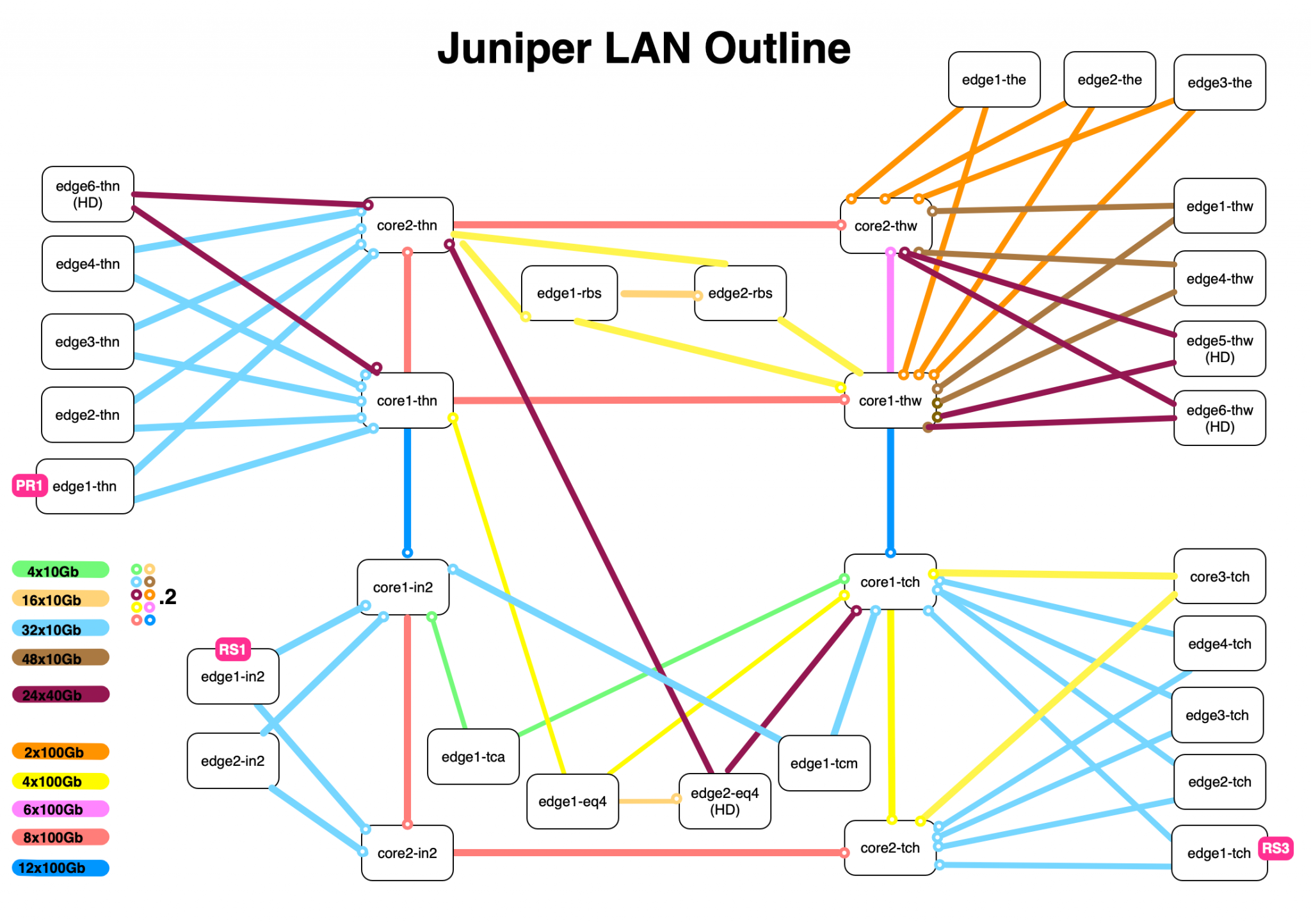

Topology Before

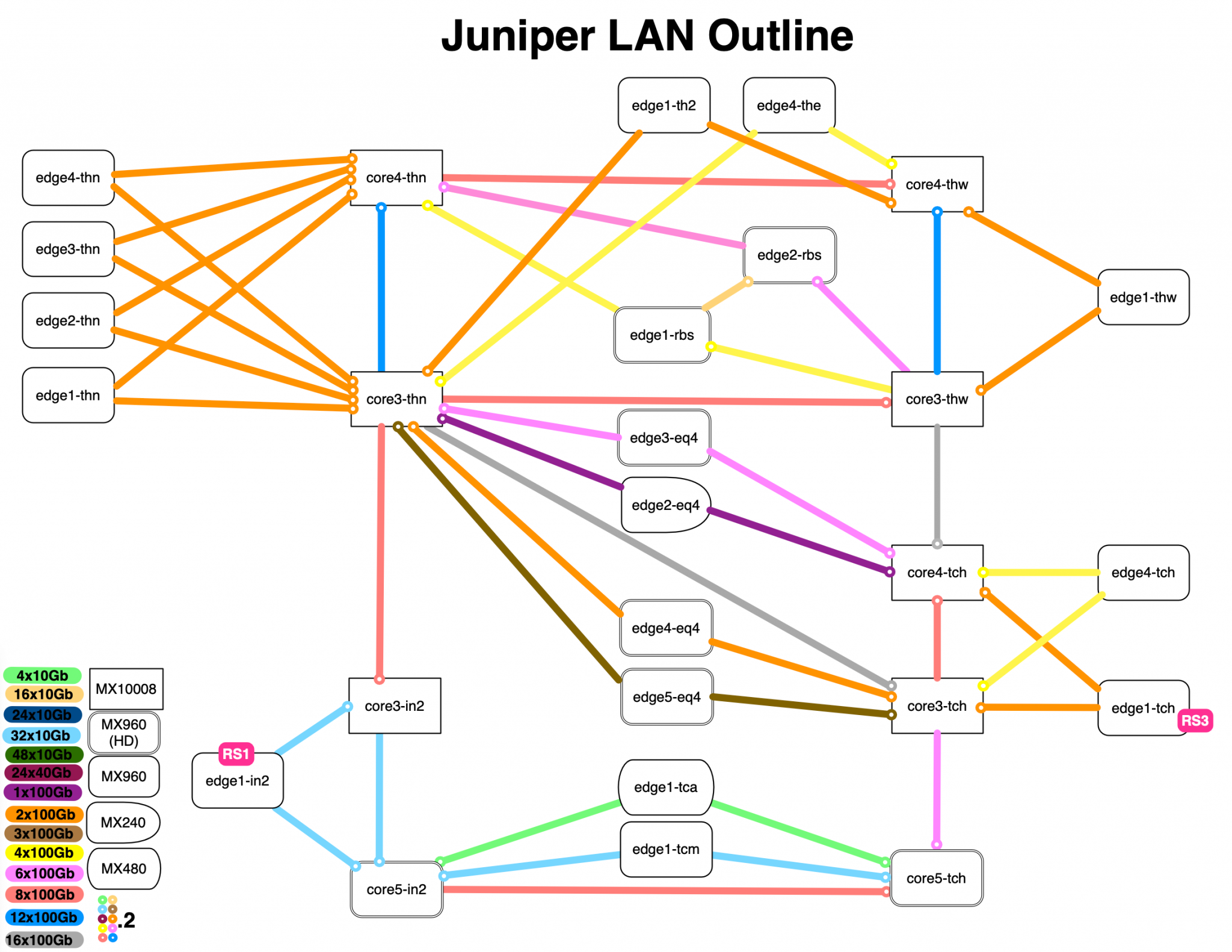

Topology After

As mentioned in the introduction, all core devices were PTX5000s acting as P (provider) devices only, with no members connected. Most 100G members were connected to the HD edges as labelled above, which were MX960s running MPC7 cards for the 100G members, allowing only four 100G ports per card. At other sites we also had 100G members connected to ‘standard’ MX960 devices using MPC4 cards, but this only allowed us two 100G ports per card.
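To make the density difference concrete, here is a minimal sketch of the card-count arithmetic. The ports-per-card figures come from the paragraph above. The member count of 10 and the function name `cards_needed` are purely illustrative assumptions, not figures from the LON1 network.

```python
import math

# 100G ports per line card, as described above for the old MX960 edges.
PORTS_100G_PER_CARD = {"MPC7": 4, "MPC4": 2}


def cards_needed(members: int, card: str) -> int:
    """Return how many line cards are needed to give each member one 100G port."""
    if members < 0:
        raise ValueError("members must be non-negative")
    return math.ceil(members / PORTS_100G_PER_CARD[card])


if __name__ == "__main__":
    hypothetical_members = 10  # illustrative only, not a real member count
    for card in PORTS_100G_PER_CARD:
        print(card, cards_needed(hypothetical_members, card))
```

Under these assumptions, the same number of 100G members needs roughly twice as many MPC4 cards as MPC7 cards, which is the kind of per-card limit that connecting members directly to the MX10Ks was intended to relieve.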

Stage One – North Plane

The first stage of the upgrades, the North Plane, began in early 2019. In the ‘before’ topology above, this covered the Telehouse sites as well as RBS (Sovereign House).

Because an upgrade of this scale takes place on a live network, a lot of ‘juggling’ of kit and additional rack space was required. The very first install was an MX10K (core3-tch) in TCH (Harbour Exchange). Although TCH is in the South Plane, this was needed to create the new MX10K core. Initially, the core3-tch uplinks were connected to the two PTXs (core1-tch and core2-tch), which allowed us to move 100G members off the existing HD edges. Those MX960s were then re-purposed for the LD6 build-out, freeing up their rack space for another MX10K (core4-tch). Once this was completed and the MX10K verified with live traffic, the next step was to build a ‘mini-core’ of MX10Ks, with THN (Telehouse North) and THW (Telehouse West) each getting an MX10K (core3-thn and core3-thw).

It was at this stage that we also brought Smartoptics Smart MUXes live for the first time, on the link between core3-thn and core3-tch. When these core3 devices went live at each site, we were also able to migrate 100G members directly onto the MX10Ks. This allowed us to eventually decommission three edges in THW and two in THN.

Overview of Smartoptics ‘Smart MUXes’

The Smartoptics solution we implemented in LON1 during these upgrades is their DCP-M solution, with PAM4 DWDM transceivers developed by Inphi. These ‘Smart MUXes’ provide ‘zero touch provisioning’, with the MUX itself providing automatic fibre distance measurement and dispersion compensation, as well as automatic modulation format detection and automatic client and line regulation.

We can also monitor the MUXes directly, which provides much greater detail than a standard passive MUX. The scalability of this solution was also one of the main reasons for the change, allowing fast and easy ISL upgrades through the Smart MUX, without the need to install or maintain additional optical transport devices. This also allowed us to provide the ports at a much lower cost.

These devices are also only 1U, so they are much more efficient in terms of rack space than some legacy options.

Stage Two – South Plane

The start of the South Plane upgrades, planned for early 2020, was unfortunately delayed by data centre restrictions put in place for COVID-19. However, once these were lifted, a considerable amount of work was undertaken to complete the project.

This stage took a slightly different approach to the North Plane, with MX960s installed in both TCH and IN2 (Interxion) as core5 at each site. This allowed us to use these cores as direct ‘swaps’ for the remaining PTX devices there. At TCH, this provided the required 10G ports for the TCA and TCM uplinks without having to run MX10K ports at 4x10G.

At IN2 we did the same for the TCA and TCM uplinks, as well as the edge1/2 uplinks via an MX960 (core5-in2). The only place we have MX10K ports running at 4x10G is in IN2, to provide the edge1/2 uplinks.

Again, the MX10K in IN2 allowed us to migrate 100G members directly to the MX10K. We also took this opportunity to move the IN2 to TCH ISL onto Smartoptics and upgrade it from 6x100G to 8x100G, with further increases available.

New PoPs

As mentioned earlier, during these upgrades we were also able to bring THN2 live as a brand new PoP, as well as implement major changes in EQ4 (Equinix Slough). In addition, we were able to expand into a new suite in LD6, with all 100G and 10G members migrated over. The uplinks to these sites were also moved to connect to the MX10Ks rather than the PTXs.

As you can see from the ‘after’ topology above, there have been substantial changes throughout the network. The most notable is the consolidation of edge routers, made possible by 100G members connecting directly to the core, which reduces the overhead of maintaining the HD edges and their associated ISLs.

Other notable upgrades were in the core, with the links between THN and THW, as well as TCH, upgraded to Smartoptics and 16x100G, and the IN2 link upgraded to 8x100G.
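For readers less used to the `NxSPEED` shorthand for link bundles used in this article (6x100G, 8x100G, 16x100G, 4x10G), the following is a small sketch of how the aggregate capacity works out. The helper name `bundle_capacity_gbps` is an illustrative assumption, and the figures are nominal link speeds rather than measured throughput.

```python
import re


def bundle_capacity_gbps(bundle: str) -> int:
    """Return the nominal aggregate capacity in Gbps of a bundle such as '8x100G'."""
    match = re.fullmatch(r"(\d+)x(\d+)G", bundle.strip())
    if match is None:
        raise ValueError(f"unrecognised bundle format: {bundle!r}")
    links, speed_gbps = (int(group) for group in match.groups())
    return links * speed_gbps


if __name__ == "__main__":
    # Bundle sizes quoted in this article.
    for bundle in ("6x100G", "8x100G", "16x100G", "4x10G"):
        print(f"{bundle}: {bundle_capacity_gbps(bundle)} Gbps")
```

On these nominal figures, moving an ISL from 6x100G to 8x100G adds 200 Gbps, and a 16x100G bundle carries 1.6 Tbps.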

Looking forward, the next plans for the LON1 network will be the implementation of EVPN.

Written by Joe Jefford, Senior Network Engineer
